A Jacksonville teenager, Brooke Curry, became the victim of a pornographic deepfake when an altered nude image of her was shared online without her consent. Like Brooke, more and more people are falling victim to deepfakes. You or I could be next!

Why Is It So Easy to Make Deepfakes?

You don’t need to be a technical expert to make deepfakes. Off-the-shelf tools such as ElevenLabs, DeepFaceLab, and HeyGen can do the dirty work.

In fact, cybercriminals continually develop and sell increasingly sophisticated deepfake tools on the dark web, a trend that is alarming in both scale and danger. As a result, it is harder than ever to tell real from fake.

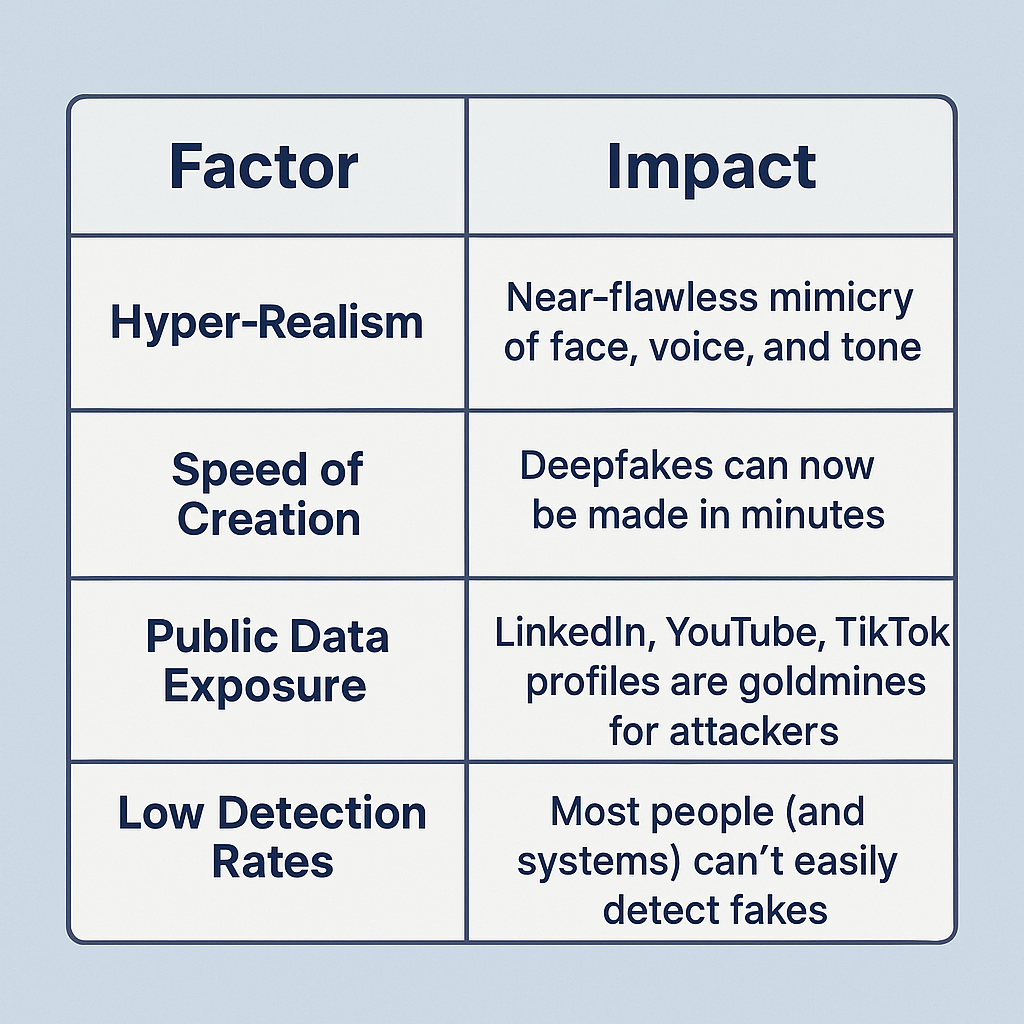

“Why Are Deepfake Scams So Effective?”

(Figure: Key factors driving the rise of deepfake scams)

🛡️ How to Detect Deepfakes

- Watch for Visual Glitches: deepfake videos often lack natural blinking, and the lip sync is sometimes out of step with the audio.

- Use Deepfake Detection Tools, such as:

  - Deepware Scanner (https://scanner.deepware.ai)

  - Microsoft Video Authenticator

  - Reality Defender (https://www.realitydefender.com), which works on real-time Zoom calls

- Analyze Metadata: check the file’s metadata for inconsistencies or signs of tampering.

- Ask Questions AI Can’t Answer: if you suspect a video or call is fake, steer the conversation toward something only the real person would know.
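If you are comfortable with a little scripting, the metadata check can be partly automated. Below is a minimal sketch, assuming you have the free ExifTool utility installed and have dumped a suspicious image or video's metadata with `exiftool -json suspicious.jpg`. The function names and the `EDITOR_HINTS` watch-list are hypothetical, chosen for illustration. Treat the output as heuristics only: metadata is easy to strip or forge, legitimate photos get edited all the time, and a clean result proves nothing.

```python
import json
from datetime import datetime

# Hypothetical watch-list of editing tools; a match is a prompt to look closer, not proof of a fake.
EDITOR_HINTS = ("photoshop", "gimp", "after effects", "premiere", "deepfacelab", "faceswap")


def parse_exif_date(value):
    """Parse an ExifTool-style date like '2024:03:01 10:00:00' (any timezone suffix is ignored)."""
    if not value:
        return None
    try:
        return datetime.strptime(str(value)[:19], "%Y:%m:%d %H:%M:%S")
    except ValueError:
        return None  # e.g. the placeholder date '0000:00:00 00:00:00'


def metadata_red_flags(tags):
    """Return a list of human-readable warnings for one file's metadata dictionary."""
    flags = []
    software = str(tags.get("Software", ""))
    if any(hint in software.lower() for hint in EDITOR_HINTS):
        flags.append(f"edited with: {software}")
    original = parse_exif_date(tags.get("DateTimeOriginal") or tags.get("CreateDate"))
    modified = parse_exif_date(tags.get("ModifyDate"))
    if original is None:
        flags.append("no original capture date (metadata may have been stripped)")
    elif modified is not None and modified != original:
        flags.append(f"modified after capture ({original} -> {modified})")
    return flags


def scan_exiftool_json(text):
    """Map each SourceFile in ExifTool's JSON array output to its list of warnings."""
    return {entry.get("SourceFile", "?"): metadata_red_flags(entry) for entry in json.loads(text)}


if __name__ == "__main__":
    # Made-up sample shaped like the output of `exiftool -json suspicious.jpg`.
    sample = (
        '[{"SourceFile": "suspicious.jpg", "Software": "Adobe Photoshop 25.0", '
        '"DateTimeOriginal": "2024:03:01 10:00:00", "ModifyDate": "2024:03:02 18:30:00"}]'
    )
    for path, flags in scan_exiftool_json(sample).items():
        for flag in flags:
            print(f"{path}: {flag}")
```

To use it on a real file, save ExifTool's JSON output to disk and pass the file's contents to `scan_exiftool_json`. Any warning it prints is a reason to dig deeper with the detection tools above, not a verdict.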

How to Protect Yourself and Your Business

For Individuals:

- 🎥 First, limit your public video content. The less footage that is available, the harder it is for attackers to replicate you.

- 🔒 Avoid oversharing personal information on social media, especially anything that reveals your voice or appearance. If possible, make your profiles private.

- 🖋️ Consider using video watermarking tools when posting online. Watermarks make your content more difficult to misuse.

For Businesses:

- ✅ Implement multi-factor authentication for all financial instructions

- 🗣️ Create a “safe word” system for verifying voice or video instructions

- 🧠 Finally, train employees to recognize and report suspicious behavior or messages

“Your eyes can no longer be trusted. In 2025, your cybersecurity starts with skepticism.” – ByteTheHack.com